March 14, 2025

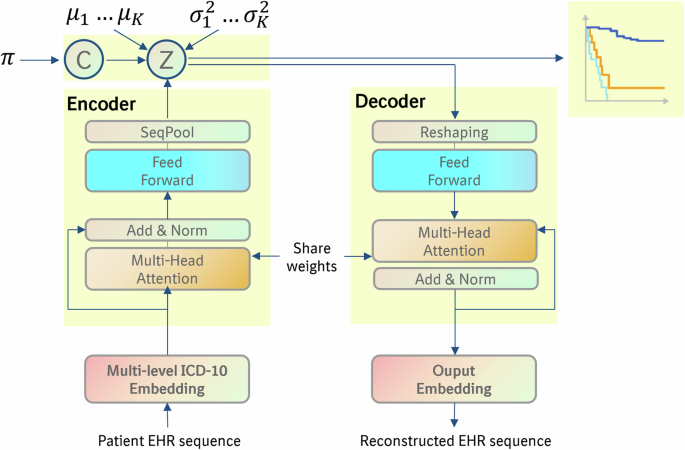

Why use decoder-only models (GPT) when we have the full transformer architecture?

I was going through the transformer architecture and then BERT and GPT. BERT uses only the encoder and GPT uses only the decoder part of the transformer. (I know the encoder part is used for classification, NER, and analysis, and the decoder part is for generating text.) But why not use the whole transformer architecture? Please guide me, I am new to this.

submitted by /u/VegetableAnnual1839
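A toy sketch may help make the encoder/decoder split concrete. The main mechanical difference between a BERT-style encoder block and a GPT-style decoder block is the self-attention mask. Encoder positions attend to the whole sequence in both directions. Decoder positions attend only to themselves and earlier positions (a causal mask), which is what lets a decoder generate text one token at a time. The code below is a simplified, hypothetical illustration: single head, no learned query/key/value projections, pure Python. `softmax` and `self_attention` are names chosen for this example, not functions from any library, and this is not how BERT or GPT are actually implemented.

```python
import math


def softmax(scores):
    """Numerically stable softmax; -inf scores get weight exp(-inf) == 0.0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs, causal):
    """Hypothetical toy single-head self-attention over a list of token vectors.

    Assumption: queries, keys and values are the raw inputs (no learned
    projections), purely for illustration.
    causal=False: every position attends to every position (encoder-style).
    causal=True:  position i attends only to positions 0..i (decoder-style).
    """
    n, d = len(xs), len(xs[0])
    outputs = []
    for i in range(n):
        scores = []
        for j in range(n):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future tokens
            else:
                dot = sum(a * b for a, b in zip(xs[i], xs[j]))
                scores.append(dot / math.sqrt(d))  # scaled dot product
        weights = softmax(scores)
        # Output at position i is the attention-weighted sum of value vectors.
        outputs.append([sum(w * xs[j][k] for j, w in enumerate(weights))
                        for k in range(d)])
    return outputs


# Two sequences that differ only in their last token.
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
changed = [[1.0, 0.0], [0.0, 1.0], [5.0, -3.0]]

enc_a, enc_b = self_attention(seq, causal=False), self_attention(changed, causal=False)
dec_a, dec_b = self_attention(seq, causal=True), self_attention(changed, causal=True)

print(enc_a[0] == enc_b[0])  # False: encoder position 0 "sees" the last token
print(dec_a[0] == dec_b[0])  # True: decoder position 0 cannot see future tokens
```

Changing only the last token changes the encoder's output at the first position but not the decoder's, because the causal mask hides everything after the current position.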