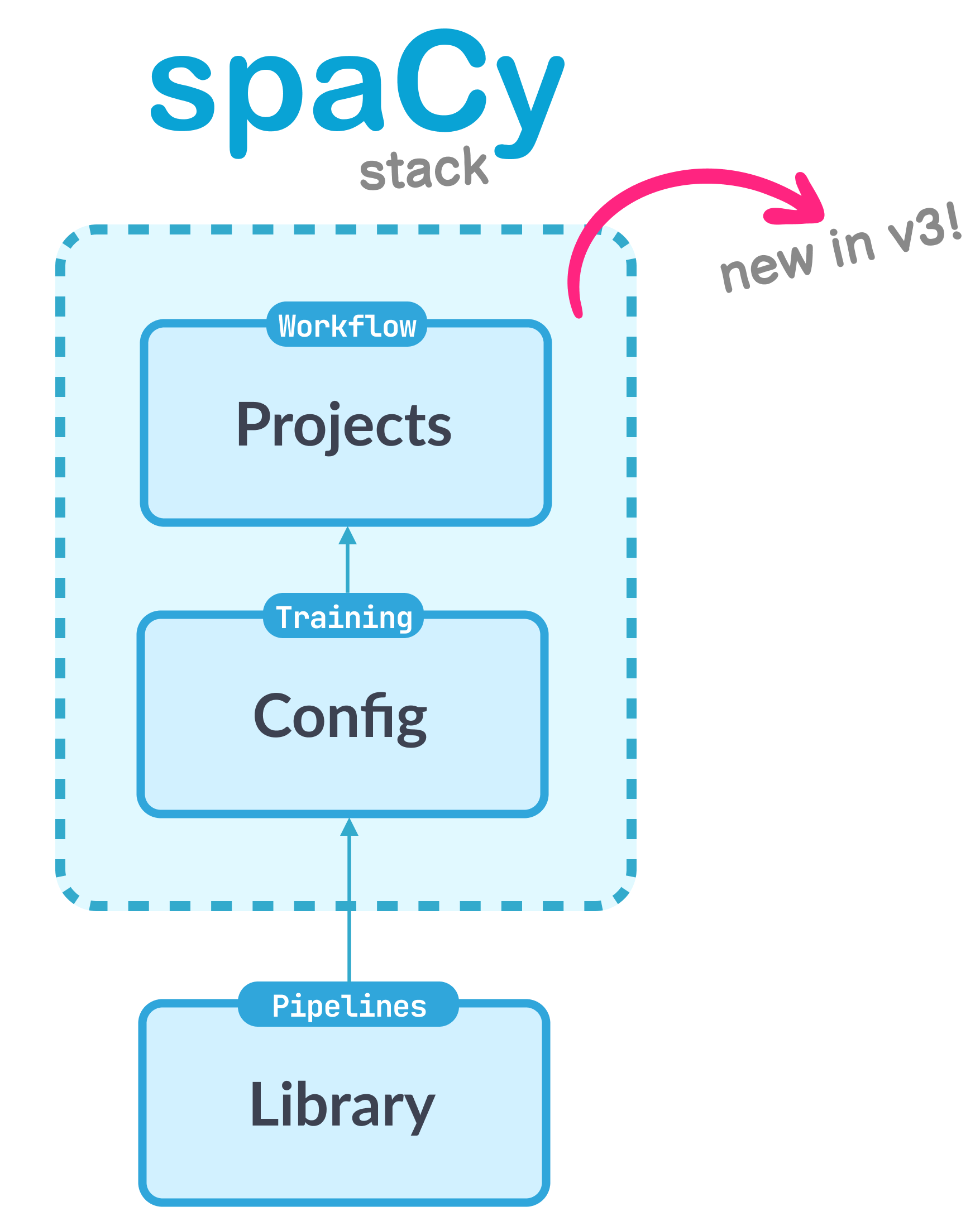

Machine learning engineers who turn prototypes into production-ready software face difficulties with the lack of tooling and best practices. spaCy v3, with its configuration and project system, introduced a way to solve this problem. Here’s my take on how it works, and how it can help your team ramp up!

I’ve been using spaCy for a few years now, having done a lot of NLP projects both during my studies and at my previous job. Now that I get to work on it full-time after joining Explosion in October, I’ve been thinking a lot about what I’ve liked in the library and how it has improved.

Back then, when I wanted to train my own NER model using spaCy v2, I’d write my own training loop. Something like this:

A simple training loop for NER in spaCy v2 (excerpt)

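```python
import random
from spacy.util import minibatch

other_pipes = [pipe for pipe in nlp.pipe_names if pipe != "ner"]
with nlp.disable_pipes(*other_pipes):  # train only the NER component
    for i in range(epochs):
        random.shuffle(train_data)  # train_data: list of (text, annotations)
        losses = {}
        batches = minibatch(train_data, size=64)
        for batch in batches:
            texts, annotations = zip(*batch)
            nlp.update(texts, annotations, drop=0.5, losses=losses)
```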

Adapted from the book “Mastering spaCy” by Duygu Altinok

It was pretty good until I started handling multiple NLP projects: I would rewrite the same code over and over again, teams would develop competing standards for what goes into the loop, and third-party integrations would become nontrivial. It can get messy in no time!

Also, the snippet above is still incomplete; a full solution would be much more involved. To complete my training loop, I’d have to write code to set my learning rate, customize my batching strategy, initialize my weights, and so on!


This year, spaCy released v3 and introduced a more convenient system for NLP projects.¹ You don’t have to write your own training loop anymore. Here’s how I think about the “spaCy stack” now.

We’ll go through the stack in increasing levels of abstraction. First, we’ll look into the configuration system and see how it abstracts our custom-made training loop. Then, we’ll look into spaCy projects and see how they abstract our configuration and NLP workflow as a whole. Lastly, I’ll share a few thoughts as a spaCy user and developer.

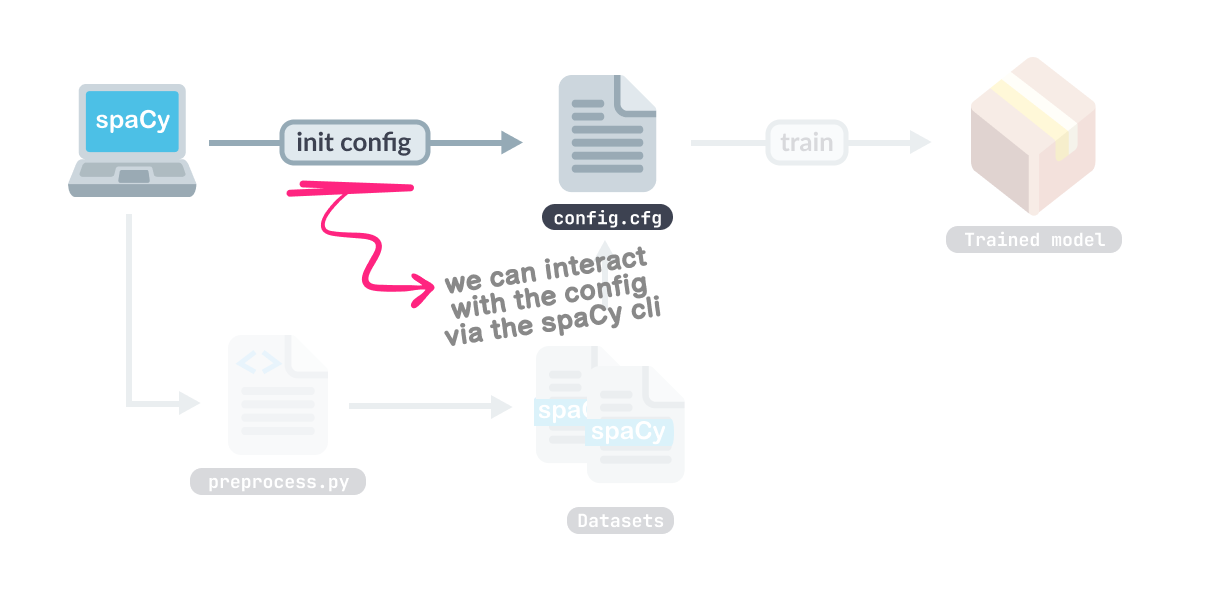

If I were to redo my NER training project, I’d start by generating a config.cfg file:

Generating a config file for training an NER model

```shell
python -m spacy init config --pipeline ner config.cfg
```

Think of config.cfg as our main hub, a complete manifest of our training procedure. We update it using any text editor, and “run” it through the spaCy command-line interface (CLI).

Even if we don’t have to write our training loop, chances are we still need to write our data preprocessing step. But if our dataset follows a standard format like CoNLL-U, we can skip this step and just convert it with the spacy convert command.

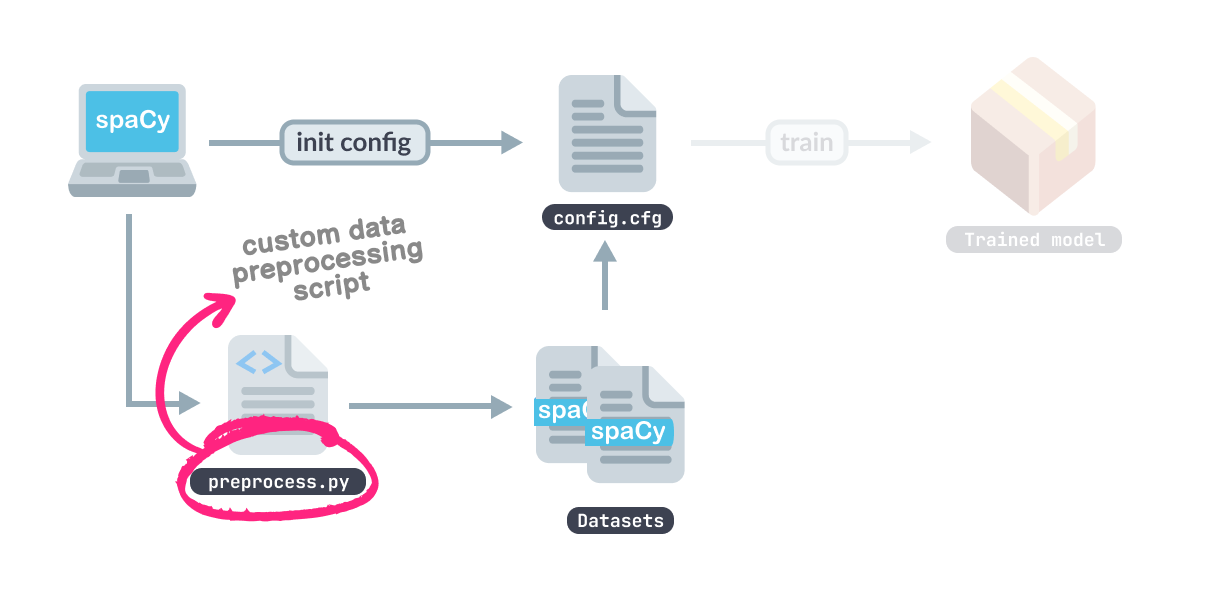

preprocessing script for serialization

Even if our preprocessing step is tightly coupled to our dataset, we’d want its output saved as a DocBin object (with a .spacy extension). Thanks to spaCy’s efficient serialization, saving our dataset in this format gives us the benefit of working with smaller files. Below is a sample recipe adapted from explosion/projects:

Converting dataset into a DocBin object (excerpt)

```python
from pathlib import Path

import spacy
import srsly
from spacy.tokens import DocBin


def convert(lang: str, input_path: Path, output_path: Path):
    """Convert (text, annotations) pairs from a JSON file into a DocBin, then save it"""
    nlp = spacy.blank(lang)
    doc_bin = DocBin()
    for text, annot in srsly.read_json(input_path):
        doc = nlp.make_doc(text)
        ents = []
        for start, end, label in annot["entities"]:
            span = doc.char_span(start, end, label=label)
            # char_span returns None if the offsets don't match token boundaries
            if span is not None:
                ents.append(span)
        doc.ents = ents
        doc_bin.add(doc)
    doc_bin.to_disk(output_path)
```
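The if span is not None check above matters because Doc.char_span returns None when the character offsets don’t line up with token boundaries (its default alignment_mode is "strict"). Here is a stdlib-only sketch of that rule, assuming a naive whitespace tokenizer; both helper names are hypothetical, and spaCy’s real tokenizer splits text differently (for example, it separates punctuation).

```python
import re
from typing import List, Optional, Tuple

# Hypothetical helpers for illustration; not spaCy's API or tokenizer.


def tokenize_with_offsets(text: str) -> List[Tuple[int, int]]:
    """Naive whitespace tokenizer: return (start, end) character offsets per token."""
    return [(m.start(), m.end()) for m in re.finditer(r"\S+", text)]


def char_span_tokens(text: str, start: int, end: int) -> Optional[Tuple[int, int]]:
    """Map a character span to a (first_token, last_token_exclusive) range.

    Returns None when start/end don't fall exactly on token boundaries,
    mimicking the strict alignment that makes spaCy's char_span return None.
    """
    offsets = tokenize_with_offsets(text)
    starts = {s: i for i, (s, _) in enumerate(offsets)}   # char offset -> token index
    ends = {e: i + 1 for i, (_, e) in enumerate(offsets)}  # char offset -> token index + 1
    if start not in starts or end not in ends:
        return None
    if starts[start] >= ends[end]:
        return None  # empty or reversed span
    return starts[start], ends[end]


text = "Explosion is based in Berlin"
print(char_span_tokens(text, 22, 28))  # "Berlin" -> (4, 5)
print(char_span_tokens(text, 22, 26))  # "Berl" ends mid-token -> None
```

Dropping misaligned spans, as the recipe does, silently loses those annotations, which is one reason to run a data check before training.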

We have now streamlined our process. Previously, with spaCy v2, I’d have to convert my training data into an intermediate GoldParse object. With v3, I only have to think about my dataset’s Doc representation, nothing more.

Once we’re done, we should have a .spacy file for each of our training and evaluation datasets, and we can provide their paths in our config (the train and dev settings under [paths]).
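If you’d rather not hard-code the paths, spaCy’s CLI also accepts dotted config overrides, for example python -m spacy train config.cfg --paths.train corpus/train.spacy --paths.dev corpus/dev.spacy. The sketch below shows one way a dotted key could be applied to a nested config, assuming the config is already a plain Python dict; apply_override is a hypothetical helper, not spaCy’s own override logic.

```python
from typing import Any, Dict


def apply_override(config: Dict[str, Any], dotted_key: str, value: Any) -> None:
    """Hypothetical helper: set config["a"]["b"]["c"] = value for dotted_key "a.b.c"."""
    *sections, key = dotted_key.split(".")
    node = config
    for section in sections:
        # Refuse to invent sections, so a typo doesn't silently add a new one
        if section not in node or not isinstance(node[section], dict):
            raise KeyError(f"Unknown config section {section!r} in {dotted_key!r}")
        node = node[section]
    node[key] = value


config = {"paths": {"train": None, "dev": None}, "training": {"max_steps": 20000}}
apply_override(config, "paths.train", "corpus/train.spacy")
apply_override(config, "paths.dev", "corpus/dev.spacy")
print(config["paths"])  # {'train': 'corpus/train.spacy', 'dev': 'corpus/dev.spacy'}
```

The same dotted naming is what the [training.batcher.size]-style section headers in the config file use, so an override and a section header point at the same place in the tree.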

Just to be sure, I’d also run debug commands

before training. My favorite is debug data: it informs me of invalid

annotations and imbalanced labels. Once everything checks out, training my NER

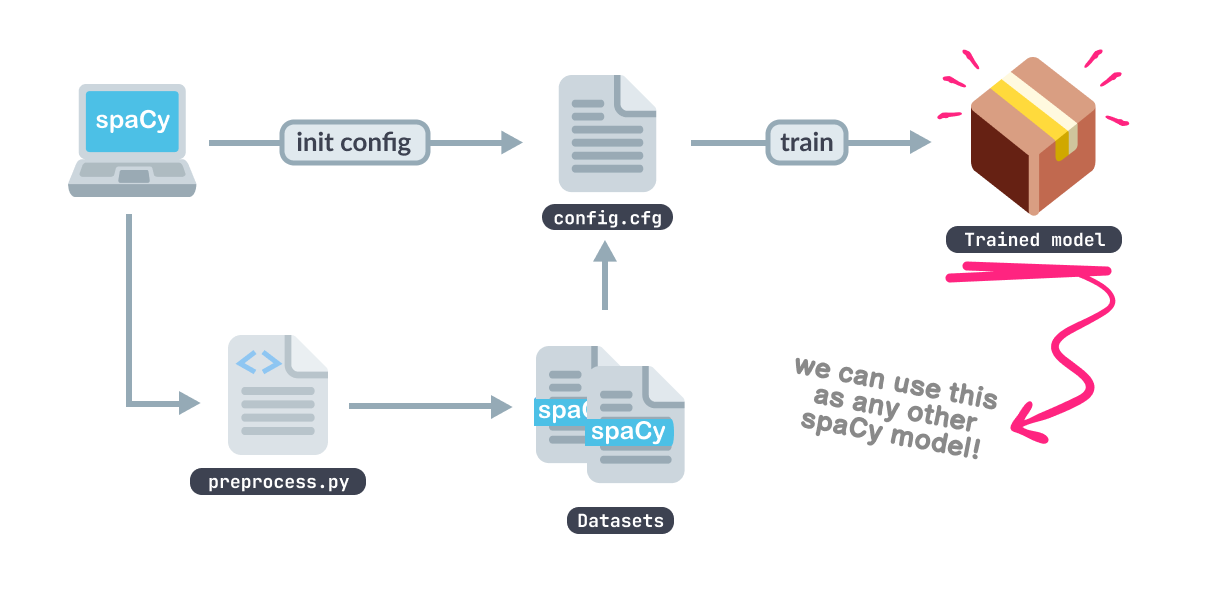

model becomes as easy as running:

How to train your model

```shell
python -m spacy train config.cfg --output ./output
```

After training, the ./output folder will contain our best model (./output/model-best) along with the model from the final step (./output/model-last), and I can use it just like any other spaCy model. I can either pass its path to spacy.load, or get some metrics on a held-out set with the spacy evaluate command.

And that’s it! Again, we don’t need to write our training loop anymore. It all boils down to our config.cfg file and data preparation script; the spaCy CLI handles the rest. For a quick overview of what runs under the hood when spacy train is executed, check out the table below:

| Method | What it does |
|---|---|
| train | The interface called when you run spacy train. Loads the training configuration and validates inputs. |
| train_while_improving | The usual training loop that iterates over batches of training data. Each iteration updates a language pipeline. |
| Language.update | The actual update step of the language pipeline. Takes an optimizer and a batch of samples. |
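To make the train_while_improving row more concrete: settings such as patience and max_steps in [training] (see the sample config further down) decide when that loop stops. Below is a simplified, stdlib-only sketch of patience-based early stopping, assuming one evaluation score arrives per step; it is not spaCy’s actual loop, which also handles batching, eval_frequency, max_epochs, and saving checkpoints.

```python
from typing import Iterable, Tuple


def train_with_patience(
    scores: Iterable[float], patience: int, max_steps: int
) -> Tuple[int, float, int]:
    """Simplified stand-in for a 'train while improving' loop (not spaCy's code).

    Consumes one evaluation score per step and stops when the best score hasn't
    improved for `patience` steps, or when `max_steps` is reached.
    A value of 0 disables the corresponding check.
    Returns (best_step, best_score, last_step).
    """
    best_score = float("-inf")
    best_step = 0
    step = 0
    for step, score in enumerate(scores, start=1):
        if score > best_score:
            best_score, best_step = score, step
        if patience and step - best_step >= patience:
            break  # no improvement for `patience` steps
        if max_steps and step >= max_steps:
            break  # hard upper bound on the number of steps
    return best_step, best_score, step


scores = [0.50, 0.60, 0.65, 0.64, 0.63, 0.62, 0.61]
print(train_with_patience(scores, patience=3, max_steps=100))  # (3, 0.65, 6)
```

Keeping the best step separate from the last step is what lets a trainer save a "best" model distinct from the "last" one.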

Of course, the implementation details differ based on what’s written in our configuration, so it’s still important to understand what goes into our config.cfg file.

How to manage your config

The config file presents us with all the options we need to train our model. Sure, it looks overwhelming at first, but the upside is that we get all the relevant information in one place, which makes it easier to reproduce experiments and trace differences across our models.

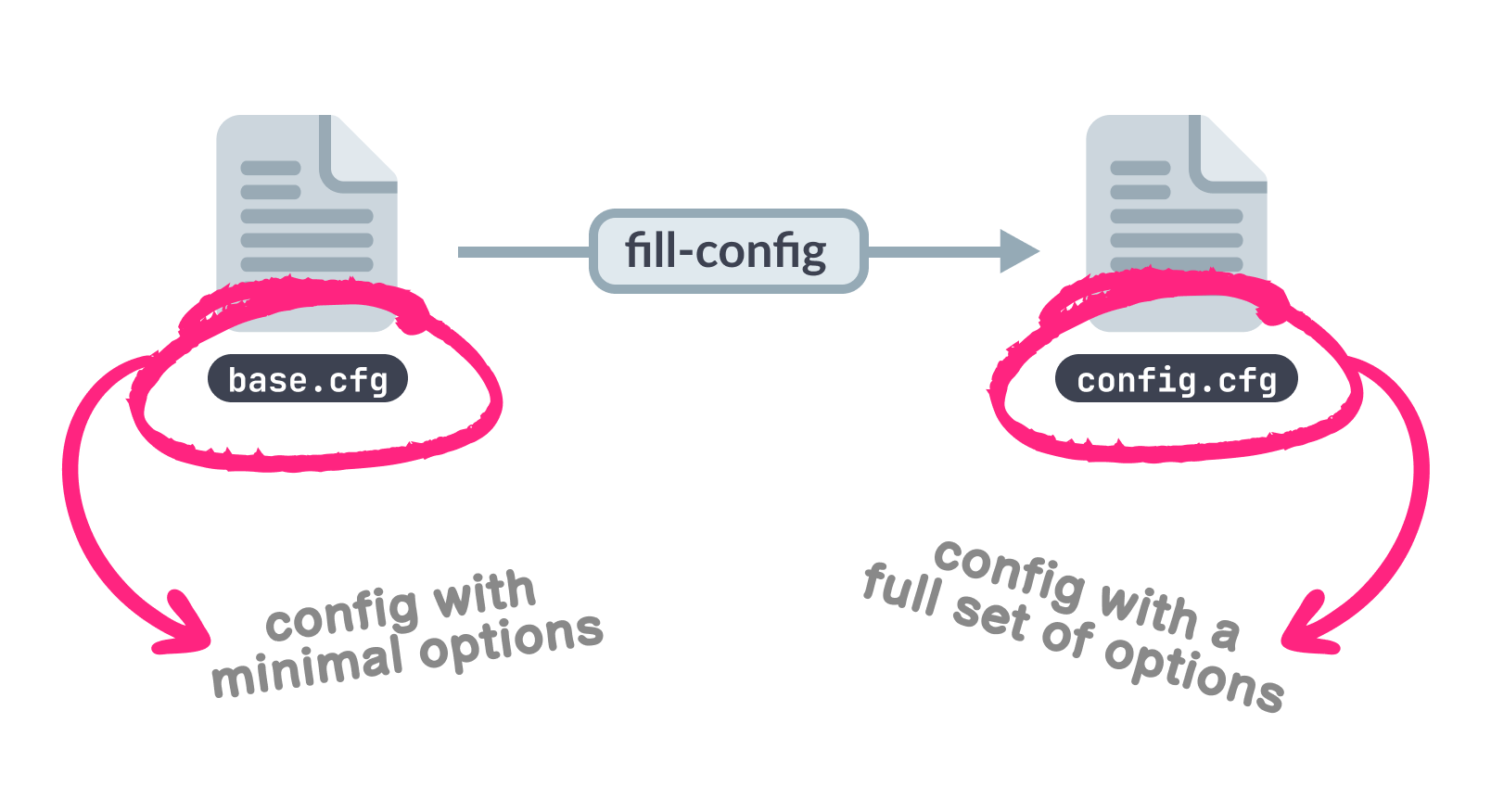

However, if you prefer looking at a file with a minimal set of options, you can create a base_config.cfg containing only the settings you need, and fill in the rest with the fill-config subcommand: `python -m spacy init fill-config base_config.cfg config.cfg`.

When running spacy train, you should still pass the complete config. So even if my base_config.cfg only contains batch_size (maybe because that’s the only setting I care about for now), the resulting config.cfg will still include all the parameters needed to train our model.
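Conceptually, filling a partial config means layering your settings over a complete set of defaults. The sketch below models that as a recursive merge of nested dicts. It is only an illustration: the DEFAULTS values are simplified from the sample config later in this post and are not spaCy’s real defaults, and spaCy’s fill-config also pulls in the default arguments of the registered functions you reference, which this sketch doesn’t attempt.

```python
import copy
from typing import Any, Dict

# Illustrative defaults, simplified from the sample config in this post
# (not spaCy's actual defaults).
DEFAULTS: Dict[str, Any] = {
    "training": {
        "dropout": 0.1,
        "max_steps": 20000,
        "batcher": {"@batchers": "spacy.batch_by_words.v1", "size": 1000, "tolerance": 0.2},
    }
}


def fill(partial: Dict[str, Any], defaults: Dict[str, Any]) -> Dict[str, Any]:
    """Return a full config: values from `partial` win, missing keys come from `defaults`."""
    filled = copy.deepcopy(defaults)  # never mutate the defaults
    for key, value in partial.items():
        if isinstance(value, dict) and isinstance(filled.get(key), dict):
            filled[key] = fill(value, filled[key])  # merge nested sections recursively
        else:
            filled[key] = copy.deepcopy(value)  # user value overrides the default
    return filled


base = {"training": {"batcher": {"size": 64}}}
full = fill(base, DEFAULTS)
print(full["training"]["batcher"])
# {'@batchers': 'spacy.batch_by_words.v1', 'size': 64, 'tolerance': 0.2}
```

Because the output depends on whatever defaults are in effect, the same base config can produce different full configs when those defaults change.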

However, be mindful during major version updates: the defaults that fill-config fills in may change, introducing variability into your setup and making it hard to reproduce. As a best practice, pin your dependencies to mitigate this.

How to understand your config

Having all parameters spelled out means that there are no hidden defaults to secretly mess up our training. So whenever I come across an unfamiliar setting, I follow the breadcrumbs in the spaCy documentation.

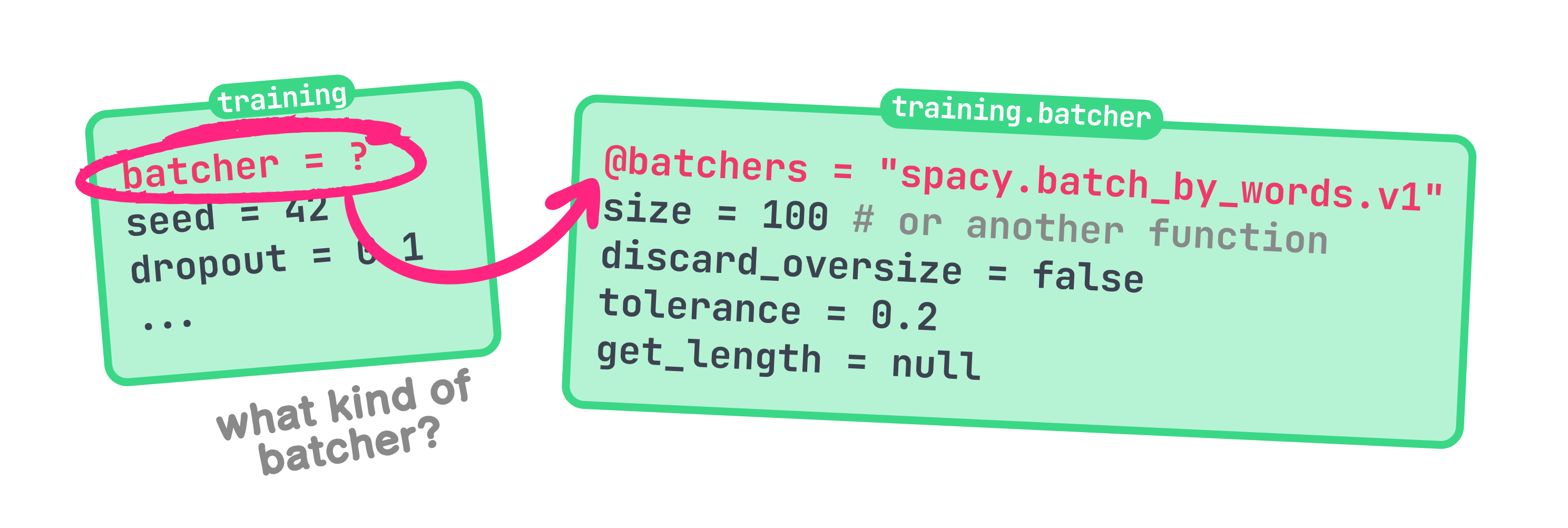

Take a look at this example. Let’s say I want to know more about the compounding.v1 schedule in [training.batcher.size]:

Sample config file (excerpt)

```ini
[training]
seed = 42
gpu_allocator = "pytorch"
dropout = 0.1
accumulate_gradient = 1
patience = 1600
max_epochs = 0
max_steps = 20000

[training.batcher]
@batchers = "spacy.batch_by_words.v1"
discard_oversize = false
tolerance = 0.2
get_length = null

[training.batcher.size]
@schedules = "compounding.v1"
start = 100
stop = 1000
compound = 1.001
t = 0.0
```


The first thing I’d do is check the section it belongs to; in our case, it’s [training]. Then, I’d head over to the “training” section of spaCy’s data format documentation. There, we can view all available settings, their expected types, and what they do.

In our config, these settings live either in the [training] section itself or in a subsection of their own. The latter means that the setting can be configured further, typically because it takes a function or a dictionary. For example, if we zoom into the batcher option, we’ll notice that it requires a function (or Callable) as its input, so it gets its own subsection, [training.batcher]. There, we specify the exact function we want to use for batching, in this case spacy.batch_by_words.v1, through the @batchers key.
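The section/subsection structure maps naturally onto a nested tree: [training.batcher] is the batcher entry inside [training]. Here is a stdlib-only sketch that parses an excerpt of the sample config with configparser and nests sections by their dotted names. It assumes values are JSON-like literals and ignores ${...} variable interpolation and the resolution of @-registered functions, both of which spaCy’s real config system (built on Thinc’s Config) handles.

```python
import configparser
import json
from typing import Any, Dict

# Excerpt of the sample config above
CONFIG_TEXT = """
[training]
seed = 42
dropout = 0.1

[training.batcher]
@batchers = "spacy.batch_by_words.v1"
discard_oversize = false
tolerance = 0.2
get_length = null

[training.batcher.size]
@schedules = "compounding.v1"
start = 100
stop = 1000
compound = 1.001
"""


def parse_value(raw: str) -> Any:
    """Decode JSON-like literals ("pytorch", false, null, 0.1); fall back to the raw string."""
    try:
        return json.loads(raw)
    except json.JSONDecodeError:
        return raw


def to_tree(text: str) -> Dict[str, Any]:
    """Illustrative parser (not spaCy's): nest sections by their dotted names."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep keys as written, e.g. "@batchers"
    parser.read_string(text)
    tree: Dict[str, Any] = {}
    for section in parser.sections():
        node = tree
        for part in section.split("."):  # "training.batcher.size" -> nested dicts
            node = node.setdefault(part, {})
        for key, raw in parser.items(section):
            node[key] = parse_value(raw)
    return tree


tree = to_tree(CONFIG_TEXT)
print(tree["training"]["batcher"]["size"]["@schedules"])  # compounding.v1
```

Walking from tree["training"] down to tree["training"]["batcher"]["size"] is the same top-down path the documentation breadcrumbs follow.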


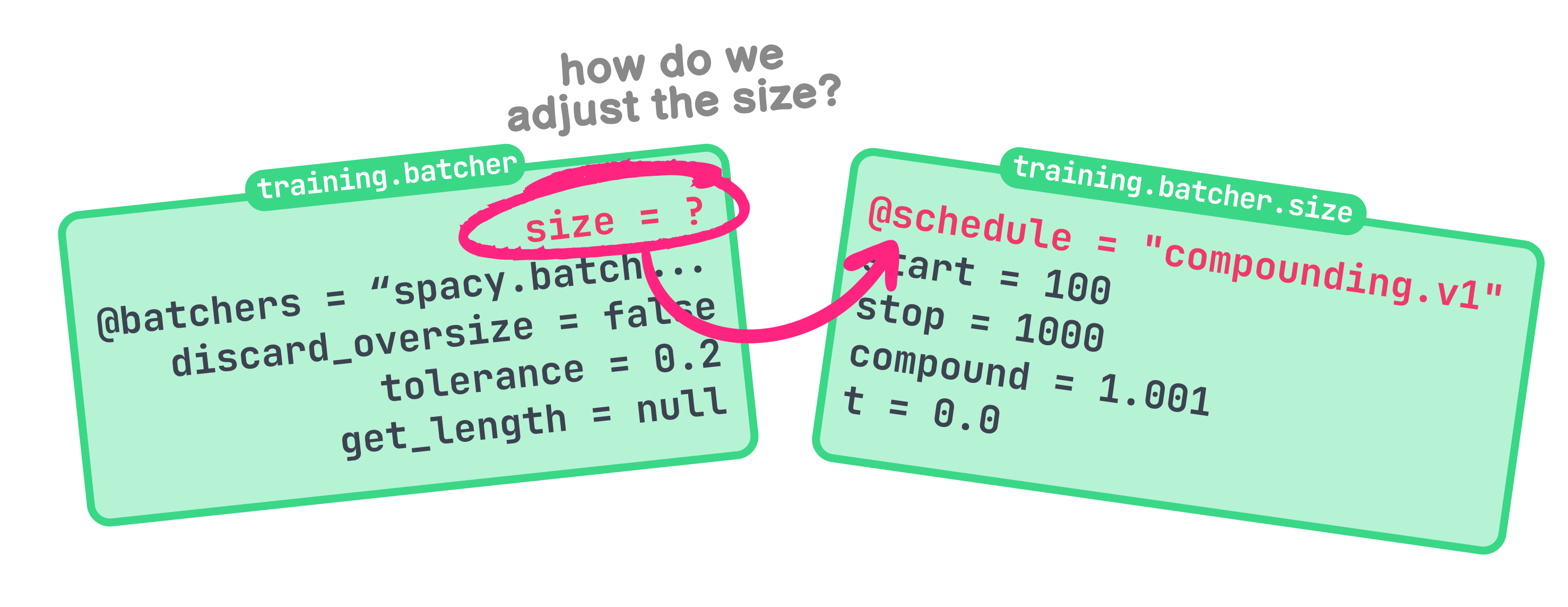

Now, we can go deeper into this batching technique. As a guide, I usually refer to spaCy’s top-level API docs, especially the batch_by_words section. Notice how the pattern repeats: discard_oversize and tolerance can’t be configured further, so they sit directly under [training.batcher]. On the other hand, the size parameter can still be customized, so it gets its own subsection. For example, let’s say we want the batch size to change according to a schedule instead of staying at a fixed value. To do so, we assign the compounding.v1 function to the size parameter and configure it in [training.batcher.size].
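To see what compounding.v1 does with the sample values (start = 100, stop = 1000, compound = 1.001): it yields a stream that begins at start, is multiplied by compound at every step, and is clipped at stop. The generator below is a stdlib-only re-implementation written for illustration, assuming start < stop; it is not Thinc’s source, and it leaves out the t parameter.

```python
from itertools import islice
from typing import Iterator


def compounding(start: float, stop: float, compound: float) -> Iterator[float]:
    """Illustrative re-implementation: yield start, start*compound, ... clipped at stop."""
    curr = float(start)
    while True:
        yield min(curr, stop)  # clip once the schedule reaches `stop` (assumes start < stop)
        curr *= compound


sizes = compounding(100, 1000, 1.001)
first = list(islice(sizes, 3))
print([round(s, 4) for s in first])  # [100.0, 100.1, 100.2001]
```

With these values the size grows slowly: it takes about ln(10) / ln(1.001) ≈ 2,304 steps to reach the cap of 1000, so early batches stay small and later ones get larger.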


Notice that we traversed our configuration from top to bottom: we started with the main component of the training workflow ([training]), then peeled back layers of abstraction until we reached more specific settings (compounding.v1). By doing so, we have also traversed the spaCy stack right down to spaCy’s machine learning library, Thinc!

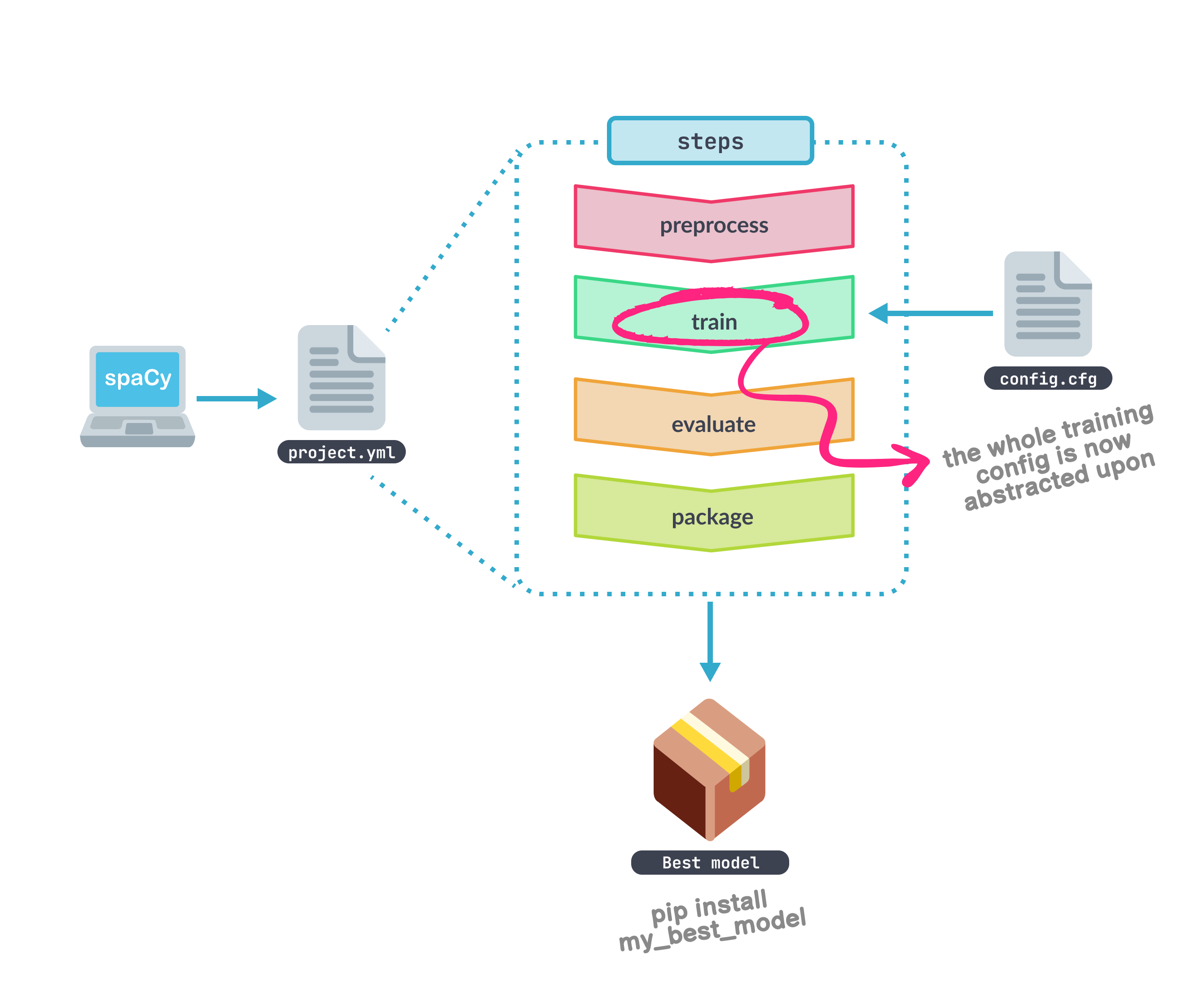

In the next section, we’ll move up the stack to spaCy projects. If the config file governs our training loop, then a spaCy project governs our entire machine learning lifecycle.

spaCy v3 went the extra mile by introducing spaCy projects, allowing us to manage not only our training loop, but also our entire machine learning workflow.

Training is but one part of the experiment lifecycle. In production, we’d also want to package our models, deploy them somewhere, and track metrics over time. spaCy’s project system lets us do just that.


Also, I lied a bit earlier: if I were to start a new NLP project, I’d begin by cloning one of the prebuilt projects for my use case. For my NER example, I’d use the NER demo pipeline:

```shell
python -m spacy project clone pipelines/ner_demo
```

From here on, everything is up to us. Our main point of interaction is the project.yml file, where we define what our project looks like. Its structure is akin to a Makefile, letting us define steps or workflows beyond model training.

Whenever I define workflows, I usually start by outlining what I want to do in the commands section before jumping right into the code. For example, in text categorization, I may just have something like this:

Commands section in project.yml (excerpt)

```yaml
commands:
  - name: 'preprocess'
    help: 'Serialize raw inputs to spaCy formats'
    script:
      - 'python scripts/preprocess.py assets/train.txt corpus/train.spacy'
      - 'python scripts/preprocess.py assets/dev.txt corpus/dev.spacy'
  - name: 'train'
    help: 'Train the model'
    script:
      - 'python -m spacy train configs/config.cfg --output training'
  - name: 'evaluate'
    help: 'Evaluate the best model'
    script:
      - 'python -m spacy evaluate training/model-best corpus/test.spacy'
```

Under script, I usually write “dummy commands” for scripts that aren’t implemented yet; it’s like scaffolding your project before building it. By planning how my scripts will run beforehand, I’m essentially writing the human-to-computer API first.
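Because project.yml reads like a Makefile, it can help to picture what spacy project run does with a workflow: spaCy projects let you list command names under a workflows key and run them in order. The sketch below is a dry-run planner, not spaCy’s implementation. It assumes the YAML has already been loaded into Python dicts (loading it would need a YAML library, omitted here), uses commands similar to the excerpt above, and invents a workflow named all for illustration.

```python
from typing import Dict, List

# Commands similar to the excerpt above, as they might look once the YAML is loaded
PROJECT = {
    "commands": [
        {"name": "preprocess", "script": [
            "python scripts/preprocess.py assets/train.txt corpus/train.spacy",
            "python scripts/preprocess.py assets/dev.txt corpus/dev.spacy",
        ]},
        {"name": "train", "script": ["python -m spacy train configs/config.cfg --output training"]},
        {"name": "evaluate", "script": [
            "python -m spacy evaluate training/model-best corpus/test.spacy",
        ]},
    ],
    # Hypothetical workflow chaining the commands above
    "workflows": {"all": ["preprocess", "train", "evaluate"]},
}


def plan(project: Dict, workflow: str) -> List[str]:
    """Dry run: return the shell lines a workflow would execute, in order."""
    commands = {cmd["name"]: cmd for cmd in project["commands"]}
    lines: List[str] = []
    for name in project["workflows"][workflow]:
        if name not in commands:
            raise KeyError(f"Workflow {workflow!r} references unknown command {name!r}")
        lines.extend(commands[name]["script"])
    return lines


for line in plan(PROJECT, "all"):
    print(line)
```

Planning before executing is a cheap way to catch a workflow that references a command you haven’t written yet, which fits the scaffolding-first approach described above.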

The project.yml file also allows us to explicitly define the expected inputs and outputs of each step using the deps and outputs keys. For example, the evaluate command below requires that a test set, corpus/test.spacy, exists before it runs:

A project command with dependency checks (excerpt)

```yaml
commands:
  - name: 'evaluate'
    help: 'Evaluate the best model'
    script:
      - 'python -m spacy evaluate training/model-best corpus/test.spacy --output metrics/best_model.json'
    deps:
      - 'training/model-best'
      - 'corpus/test.spacy'
    outputs:
      - 'metrics/best_model.json'
```

I take advantage of spaCy’s dependency checks: they give me a clear sense of what data or models go in and out of each step.

By declaring deps and outputs, we can easily see each step’s dependencies and how the commands chain together. This is one of my favorite features, because I often get bitten by missing files or hidden requirements. It also allows me to push artifacts to and pull them from remote storage (with spacy project push and spacy project pull).
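Here is a rough, stdlib-only sketch of the bookkeeping that deps and outputs make possible: refuse to run a step when a dependency is missing, and skip it when neither its dependencies nor its outputs have changed since the last run. spaCy’s project runner keeps similar state in a project.lock file, but the lock layout, the hashing scheme, and the function names below are assumptions for illustration, and the sketch only handles plain files (not directories such as a model folder).

```python
import hashlib
from pathlib import Path
from typing import Dict, List, Optional

# Hypothetical lock layout: {command_name: {"deps": <hash>, "outputs": <hash>}}.
# This is an illustration, not the format of spaCy's project.lock.
Lock = Dict[str, Dict[str, Optional[str]]]


def checksum(paths: List[str]) -> Optional[str]:
    """Hash the contents of the given files; return None if any file is missing."""
    digest = hashlib.sha256()
    for p in sorted(paths):
        path = Path(p)
        if not path.is_file():
            return None
        digest.update(p.encode())  # include the path so renames count as changes
        digest.update(path.read_bytes())
    return digest.hexdigest()


def should_run(name: str, deps: List[str], outputs: List[str], lock: Lock) -> bool:
    """Fail on missing deps; otherwise run only if something changed since last time."""
    missing = [d for d in deps if not Path(d).is_file()]
    if missing:
        raise FileNotFoundError(f"Command {name!r} is missing dependencies: {missing}")
    current = {"deps": checksum(deps), "outputs": checksum(outputs)}
    # Run if outputs are missing or the recorded state differs from the current one
    return current["outputs"] is None or lock.get(name) != current


def record(name: str, deps: List[str], outputs: List[str], lock: Lock) -> None:
    """Store a command's state after it ran successfully."""
    lock[name] = {"deps": checksum(deps), "outputs": checksum(outputs)}
```

Hashing contents rather than comparing timestamps means a file that was touched but not changed doesn’t trigger a rerun.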


The good thing about project.yml is that it supports a top-down approach to problem solving: I can outline a big-picture view of my project first, with all its dependencies and outputs, and then work my way through the details.

Lastly, it also offers some conveniences like automatically generated documentation (spacy project document) and integrations with popular tools.²

Using one of the available demo projects also makes it easier to tap into the larger machine learning ecosystem.

Final thoughts

spaCy v3 offers a lot beyond the standard NLP use cases: it gives you better control over your training and end-to-end workflows. Of course, migrating a project is not easy, especially if a dependency has gone through a major change. spaCy’s config and project system fit some use cases better than others, so I’d like to outline them here:

| Use case | spaCy version | Suggestion |
|---|---|---|
| Starting a new NLP project | v3 | Start using v3 and take advantage of the project templates and config system. |
| NLP project in production, using the training config | v3 | Awesome! You can adopt parts of the project system, but of course, if it ain’t broke, don’t fix it. |
| NLP project in production with a custom training loop | v3 | Try adopting at least the config system, and evaluate the results using your current model as a baseline. |
| NLP project in production, but using spaCy v2 | v2 | If possible, migrate to v3 (while being mindful of your model versions). Or seek help from Explosion! |

As usual, standard software engineering practices still apply: always pin your dependencies, ensure your environments are reproducible, and if it ain’t broke, don’t fix it.

However, if you do decide to make the jump, be sure to check out the migration guide in the spaCy docs. The old v2 docs are also still online, so you can refer to them when needed.